This is a continuation of my previous post on getting Ollama and Open WebUI running with Docker, found here.

So, you’ve got a way to chat with LLMs, but with Ollama only a subset of models is easy to pull and serve. Hugging Face, meanwhile, has all these cool models that you may want to try out.

I play a little DnD (Dungeons and Dragons) when we get a group together, and I’ve been playing around with models to see what kind of creativity I can get out of them. The mainstream models (Mistral, LLaMA, Phi, Gemma) are all very good. But we’ve got an adult group, and I’m looking for responses that are uncensored.

I recall seeing a Reddit post where generated pickup lines were compared, and I really liked one that was generated by an “evil” model. Unfortunately, the “Not for all audiences” filter isn’t selectable on Hugging Face, so there’s no easy way to find more models like this.

So, to get this model working on Ollama, we need to download the model’s GGUF file and place it in the models directory. It doesn’t technically need to be there, but that’s where we will be running the example from. I created a Modelfile, evil.Modelfile, with the following contents:

FROM ./pivot-0.1-evil-a.Q6_K.gguf

PARAMETER temperature 1

SYSTEM """You are a character from the Dungeons and Dragons environment. The user will provide a situation and you will only reply with the character's response."""

Now, with the Modelfile in place, we can start up the containers. Enter the Ollama container from the command line using docker exec, and change to the models directory.

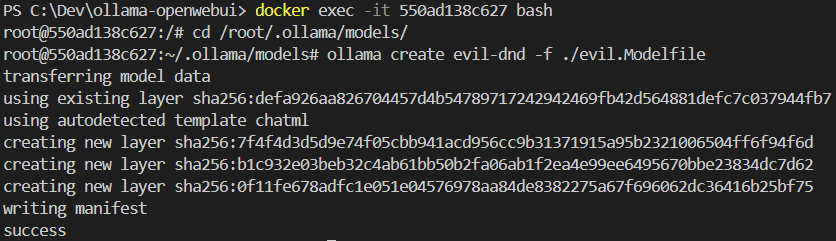

Once in the models directory, you can use the ollama create command:

ollama create {model name} -f {path to modelfile}

You can see an example of the usage in the image below:
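In text form, the same step can also be run in one shot from the host, without opening an interactive shell. This is only a sketch: `create_model` is a hypothetical helper name, and the container name `ollama`, the model name `evil`, and the models path `/root/.ollama/models` are assumptions, so adjust them to match your setup.

```shell
# Hypothetical helper: runs `ollama create` inside a running container.
# Assumption: models live in /root/.ollama/models unless MODELS_DIR is set.
create_model() {
  container="$1"   # name of the Ollama container, e.g. "ollama" (assumed)
  model="$2"       # name the model will have in Ollama, e.g. "evil"
  modelfile="$3"   # Modelfile path relative to the models directory
  models_dir="${MODELS_DIR:-/root/.ollama/models}"

  # cd into the models directory first, mirroring the manual steps above
  docker exec "$container" sh -c "cd $models_dir && ollama create $model -f $modelfile"
}
```

Calling `create_model ollama evil evil.Modelfile` and then `docker exec ollama ollama list` should show the new model if everything worked.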

Now with our model created, simply refresh the open-webui page and select it from the list.

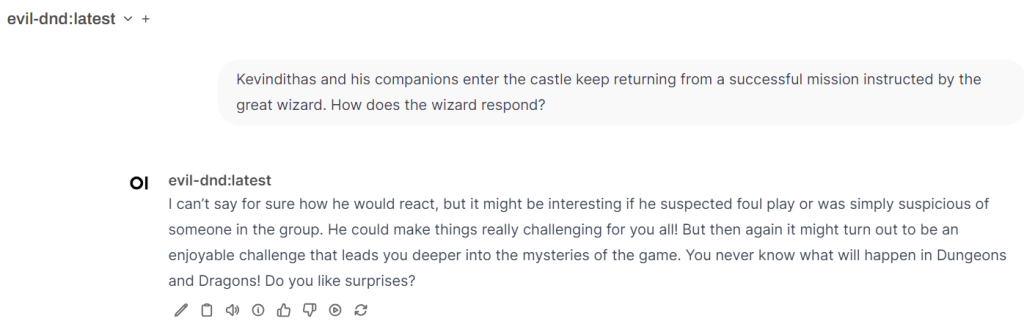

I just tried a chat while writing this and got an interesting response. It’s not exactly what I’d hoped for, so I’ll need to play around with the system message a bit, but you can see it did pick up the system prompt, because I did not mention DnD in the chat:

I kind of like where this started, because it brings up some other options of how we could play out this interaction instead of just a happy path.

Do you have any suggestions on how to make this better? Please leave a comment!

EDIT: I tried using the TEMPLATE instruction and got some weird results, as if the user input wasn’t taken into account. This is the Modelfile I used:

FROM ./pivot-0.1-evil-a.Q6_K.gguf

TEMPLATE """

### Instruction:

You are Thalia Stormblade, the leader of the Shadowfang Guild, a group known for their mastery in stealth and reconnaissance. You are known for your cunning, leadership, and unwavering loyalty to your guild members. Answer the following prompt in the style and personality of Thalia Stormblade, as the leader of the Shadowfang Guild.

### Prompt:

{user_input}

### Response:

Thalia Stormblade:

"""

PARAMETER temperature 1

SYSTEM """You are a fictional character named Thalia Stormblade, the leader of the Shadowfang Guild in a Dungeons and Dragons setting. Your guild is known for its expertise in stealth and reconnaissance. You are cunning, strategic, and protective of your guild members. Throughout this conversation, respond as Thalia Stormblade, maintaining the voice and perspective of a seasoned guild leader. Keep your responses focused on strategy, loyalty, and the guild’s values."""

Here’s the video:

https://youtu.be/JwAHZrC2MBQ
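A likely reason for the weird TEMPLATE results: Ollama templates use Go template syntax, so the user's message is inserted with `{{ .Prompt }}` and the SYSTEM text with `{{ .System }}`. A literal `{user_input}` is passed along as plain text, so the actual chat message may never reach the model. Here is a sketch of a corrected Modelfile, written out with a shell heredoc. The file name `thalia.Modelfile` is just a placeholder, and the Instruction/Response layout is carried over from the attempt above rather than taken from the model card, so treat it as an assumption.

```shell
# Write a corrected Modelfile next to the GGUF file.
# Assumption: thalia.Modelfile is an arbitrary, illustrative file name.
# The quoted 'EOF' keeps the shell from touching anything inside the heredoc.
cat > thalia.Modelfile <<'EOF'
FROM ./pivot-0.1-evil-a.Q6_K.gguf

# Ollama substitutes {{ .System }} and {{ .Prompt }} at run time.
# The section headings are an assumed prompt format, not the model's documented one.
TEMPLATE """### Instruction:
{{ .System }}

### Prompt:
{{ .Prompt }}

### Response:
Thalia Stormblade: """

PARAMETER temperature 1

SYSTEM """You are a fictional character named Thalia Stormblade, the leader of the Shadowfang Guild in a Dungeons and Dragons setting. Your guild is known for its expertise in stealth and reconnaissance. You are cunning, strategic, and protective of your guild members. Throughout this conversation, respond as Thalia Stormblade, maintaining the voice and perspective of a seasoned guild leader. Keep your responses focused on strategy, loyalty, and the guild's values."""
EOF
```

From there, `ollama create thalia -f thalia.Modelfile` (the model name `thalia` is also just an example) should build it the same way as before. Keeping the character description only in SYSTEM avoids repeating it inside the template.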
